English Language Workbook for Sound Engineering Students, to Accompany Robert Toft's "Recording Classical Music"

This workbook is intended for English classes with secondary vocational education (SPO) students in the sound engineering department. It contains reading comprehension exercises: open-ended questions, true/false statements, and multiple-choice questions. The exercises are organized by the chapters and pages of the book. The answer key and glossary are at the end of the workbook. The exercises can also be used as end-of-term test tasks.

WORKBOOK

FOR PRACTICAL WORK IN THE DISCIPLINE

"FOREIGN LANGUAGE (ENGLISH)" IN THE SECTION

"General Humanities and Socio-Economic Cycle"

53.02.08 "Musical Sound Engineering"

to accompany Robert Toft's "Recording Classical Music", 2020

Compiled by Aleksandra Kirillovna Ryabko, English language teacher

Contents

Explanatory Note. Methodological Guidelines for Studying the Discipline

Chapter 1

Chapter 2

Chapter 3

Chapter 4

Chapter 5

Chapter 6

Chapter 7

Chapter 8

Chapter 9

Chapter 10

Chapter 11

Chapter 12

Chapter 13

Chapter 14

Chapter 15

Appendix 1. Glossary

Appendix 2. Answers

Explanatory Note

This workbook is intended for practical work in the discipline "Foreign Language" in the section "General Humanities and Socio-Economic Cycle" for specialty 53.02.08 "Musical Sound Engineering". Its main purpose is to consolidate and activate language and speech material and to automate lexical and grammatical skills in work with professionally oriented texts. The workbook contains a set of exercises that help students improve their skills in working independently with specialized texts. The exercises aim at quick, lasting memorization of the professional terms used in the specialty "Musical Sound Engineering", based on professionally oriented texts. Through its system of exercises, the workbook trains students to analyze the meaning and the logical and communicative organization of a text, skills needed both to understand fully what they read and to use it appropriately in speech. The tasks can be set as homework or used in class to review material already covered.

The workbook consists of 15 units and two appendices (Appendix 1 and Appendix 2). Each unit provides for the consistent, step-by-step study of a topic related to the students' future professional work and to the principles applied in musical sound engineering practice. Each lesson is built on the principle of developing speech activity: reading and speaking.

Appendix 1 contains a dictionary of professional terms and a glossary. Appendix 2 contains the answer keys to the exercises.

The wide range of practical tasks for independent work calls for a creative approach from students (there are higher-difficulty tasks that require extended answers) and makes it possible to apply a learner-centered approach to students with different levels of preparation and different interests. The workbook includes tasks that prepare students for objective assessment and self-assessment while studying English.

The workbook corresponds to the level of student preparation in the discipline "Foreign Language (English)" in the section "General Humanities and Socio-Economic Cycle" for the specialty "Musical Sound Engineering".

Methodological Guidelines for Studying the Discipline

The requirements below follow the Federal State Educational Standard (FGOS) for the discipline "Foreign Language (English)" for specialty 53.02.08 "Musical Sound Engineering".

Requirements for the Results of Mastering the Discipline

On completing the course, the student must have a number of general competencies (OK): to search for and use the information needed to carry out professional tasks effectively and for professional and personal development (OK4); to use information and communication technologies in professional work (OK5); to work in a group and in a team and communicate effectively with colleagues, management, and consumers (OK6); to take responsibility for the work of team members (subordinates) and for the results of assignments (OK7); to define the tasks of professional and personal development independently, engage in self-education, and plan professional development deliberately (OK8); to find their way amid frequent changes of technology in professional work (OK9).

As a result of studying the discipline, the student must

- know: the lexical minimum (1,200-1,400 lexical units) and the grammatical minimum needed to read and translate (with a dictionary) foreign-language texts of a professional nature.

- be able to: communicate (orally and in writing) in the foreign language on professional and everyday topics; translate (with a dictionary) foreign-language texts of a professional nature; independently improve spoken and written language and expand vocabulary.

- have: practical skills of spoken and written communication in the foreign language in the course of professional work.

- demonstrate: the ability and readiness to apply the acquired knowledge in practice.

The structure of the practical classes includes:

1. Exercises. Post-reading comprehension tasks that stimulate skill development on the basis of the issues raised in the texts read. Multiple-choice and true/false tasks can be used for homework and for a quick check of reading comprehension; extended-answer tasks can be used for group discussion in class.

2. Appendix 1. Contains a language commentary (Glossary): a dictionary of the most frequent vocabulary and expressions found in the field of musical sound engineering, with a linguistic commentary explaining the meaning of the main professional terms.

3. Appendix 2. Contains the answer keys to the exercises.

Chapter 1

Page 3

1. What causes the compression and rarefaction of air molecules?

A. Vibrating objects

B. Static objects

C. Sound waves

D. Light waves

2. What is a complete cycle of a sound wave measured in?

A. Degrees

B. Meters

C. Seconds

D. Hertz

3. What shape does the simplest waveform, the sine wave, have?

A. Square

B. Triangle

C. Sine

D. Circle

4. What is the peak of a sound wave represented in degrees?

A. 90°

B. 180°

C. 270°

D. 360°

5. How do physicists usually represent soundwaves?

A. By drawings of air molecules

B. By undulating lines on a graph

C. By sound recordings

D. By visual art

Page 5 True/False

1. Instruments generate only a single frequency for each note, according to the document.

2. The fundamental frequency is the first harmonic of the series.

3. Complex waveforms with a recognizable harmonic timbre consist of a collection of sine waves that are not integer multiples of the fundamental frequency.

4. The sine waves described in the document have pitch but lack timbral quality.

5. Noise is an example of complex waves with harmonic timbre.

Page 6

1. What is the mathematical relationship between overtones and the fundamental frequency on a vibrating string?

2. How does the harmonic series relate to musical notes on a staff?

3. What contributes to the characteristic timbre of musical instruments?

4. What examples are given to illustrate the differences in overtone emphasis between instruments?

5. What visual tools are mentioned for analyzing the overtone series of a violin note?
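The overtone relationship asked about above can be illustrated with a short sketch. It assumes an ideal string whose overtones are exact integer multiples of the fundamental; the function name `harmonic_series` and the 110 Hz example are illustrative choices, not taken from the book.

```python
def harmonic_series(fundamental_hz, count):
    """Return the first `count` harmonics of a fundamental frequency.

    The first harmonic is the fundamental itself; each later harmonic
    is the next integer multiple of it (ideal-string assumption).
    """
    return [fundamental_hz * n for n in range(1, count + 1)]


# Illustrative fundamental of 110 Hz (the note A2).
for number, freq in enumerate(harmonic_series(110.0, 5), start=1):
    print(f"harmonic {number}: {freq} Hz")
```

Real instruments emphasize these harmonics to different degrees, which is what the timbre questions above explore.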

Page 9

1. What does the dotted line in Figure 1.11 represent?

A. Direct sound of the first wavefront

B. Early reflections

C. Late reflections

D. Reverberation

2. When do early reflections begin according to the document?

A. At 10 ms

B. At 30 ms

C. At 50 ms

D. At 80 ms

3. What happens to the reflections after about 80 ms?

A. They become distinguishable

B. They fade to silence

C. They become early reflections

D. They become direct sound

4. What do late reflections provide to the sound?

A. A sense of direction

B. A sense of fullness

C. A sense of distance

D. A sense of clarity

5. What does direct sound help listeners determine?

A. The size of the room

B. The location of the source

C. The fullness of the sound

D. The distance from the source

Pages 9-10 True/False

1. A concert hall must be quiet enough for very soft passages to be clearly audible.

2. Reverberation time is not an important factor in determining the quality of a performance venue.

3. Opera houses have the longest reverberation times compared to orchestral and chamber music venues.

4. Orchestral halls generally have reverberation times between 1.55 and 2.05 seconds.

5. Chamber halls have longer pre-delays than orchestral halls.

Pages 11-12

1. What is the effect of early reflections in a reverberant space?

A. They create a sense of intimacy

B. They make the sound more diffuse

C. They have no effect on sound quality

D. They only affect larger halls

2. Why are rectangular-shaped performance spaces preferred according to Beranek?

A. They have more early reflections from nearby sidewalls

B. They are wider than other shapes

C. They have longer reverberation times

D. They are easier to construct

3. What is the ideal reverberation time (RT60) for choral music in large cathedrals?

A. 1.4 seconds

B. 2.5 seconds or more

C. 1.5 seconds

D. 0.5 seconds

4. What contributes to a sense of spaciousness in narrow shoebox-shaped rooms?

A. Abundance of early lateral reflections

B. Lack of reverberation

C. Presence of only late reflections

D. Smaller dimensions

5. What type of music benefits from narrower rectangular halls with strong early reflections?

A. Choral music

B. Jazz music

C. Eighteenth-century concertos and symphonies

D. Pop music

Chapter 2

Page 13

1. What does a microphone do in the audio chain?

A. It amplifies sound

B. It makes an electrical copy of sound waves

C. It converts digital signals to analog

D. It stores audio recordings

2. What is the purpose of an analog-to-digital converter (ADC)?

A. To amplify sound

B. To change voltage into a digital form

C. To transduce electrical current into sound waves

D. To store audio recordings

3. What does a digital-to-analog converter (DAC) do?

A. It converts digital signals into variations of voltage

B. It amplifies sound before it reaches the speakers

C. It makes an electrical copy of sound waves

D. It generates line-level output

4. What is the role of an amplifier in the audio chain?

A. To convert sound waves into electrical signals

B. To generate line-level output from a weak current

C. To transduce electrical current into sound waves

D. To store audio recordings

5. What happens to the audio signal as it travels along the audio chain?

A. It remains unchanged

B. It is amplified only

C. It may degrade due to multiple devices

D. It is stored digitally

Page 14

1. What does the term 'analog' refer to in the context of audio recording?

2. How does digital audio differ from analog audio in terms of signal representation?

3. What are the three components of Pulse Code Modulation (PCM) and what does each component do?

4. Who invented Pulse Code Modulation (PCM) and in what decade did this occur?

5. What does the term 'bit' stand for in digital audio systems, and what does it signify?

Page 15

1. What is the process of measuring the voltage of an electrical audio signal at regular intervals called?

A. Sampling

B. Aliasing

C. Reconstruction

D. Nyquist Theorem

2. What sampling rate is commonly used for CD distribution?

A. 40k

B. 44.1k

C. 48k

D. 96k

3. According to the Nyquist theorem, how often must measurements be taken to accurately recreate an analog signal?

A. At least once per second

B. At least twice the highest frequency

C. At least four times the highest frequency

D. At least half the sampling rate

4. What occurs when sampling rates are below the minimum dictated by the Nyquist theorem?

A. Reconstruction

B. Aliasing

C. Sampling

D. Filtering

5. What is the upper limit of human hearing in kHz?

A. 10 kHz

B. 20 kHz

C. 30 kHz

D. 40 kHz

Page 15 True/False

1. A group of eight digits is called a byte.

2. 24-bit audio allows for a dynamic range of 120 dB.

3. The maximum frequency a digital system can represent is equal to the sampling rate.

1. Sampling is the process of measuring the voltage of an electrical audio signal at irregular intervals.

2. The Nyquist theorem states that measurements must be taken at a rate twice the highest frequency in the signal.

3. A sampling rate of 44.1k is sufficient for accurately reconstructing waveforms for human hearing.

4. Aliasing occurs when too many samples are taken of a signal.

5. The minimum sampling rate required for the upper limit of human hearing is 40k.
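The sampling statements above can be checked with a small sketch of the Nyquist relationship. It assumes an ideal sampler with no anti-aliasing filter; the function names and the 25 kHz test tone are hypothetical and chosen only for illustration.

```python
def min_sampling_rate(highest_hz):
    """Minimum sampling rate (Hz) needed for a signal whose highest
    frequency is `highest_hz`, per the Nyquist theorem."""
    return 2 * highest_hz


def alias_frequency(signal_hz, sample_rate_hz):
    """Frequency at which a sampled sine wave appears after sampling.

    Frequencies above half the sampling rate fold back into the
    range 0 .. sample_rate_hz / 2 (aliasing).
    """
    folded = signal_hz % sample_rate_hz
    return min(folded, sample_rate_hz - folded)


print(min_sampling_rate(20_000))        # rate needed for a 20 kHz upper limit
print(alias_frequency(1_000, 44_100))   # below half the rate: unchanged
print(alias_frequency(25_000, 44_100))  # above half the rate: folds back
```

A tone below half the sampling rate keeps its frequency, while the 25 kHz tone reappears at a lower, false frequency.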

Page 16 True/False

1. Sampling imposes a continuous flow of data on a signal.

2. Quantization introduces errors into the system that can be heard as nonrandom noise.

3. A 3-bit scale has eight possible steps.

4. Dithering is used to reduce the audibility of errors in an audio signal.

5. The addition of random noise to a signal can replace nonrandom distortion with a more pleasing noise spectrum.

Page 16

1. What is the effect of quantization on a digital signal?

2. How does the number of bits in a scale affect the rounding error?

3. What is the purpose of dithering in audio processing?

4. What happens during the process of requantization?

5. Why is random noise preferred over nonrandom noise in audio signals?

Page 16

1. What does sampling impose on a signal?

A. A succession of discrete measurement points

B. A continuous flow of data

C. An infinite number of values

D. A random selection of points

2. What is the effect of quantization on a signal?

A. It introduces errors into the system

B. It enhances the signal quality

C. It eliminates noise completely

D. It increases the number of measurement points

3. What is the purpose of dithering in audio processing?

A. To add random noise to the signal

B. To reduce the bit depth without distortion

C. To increase the amplitude of the audio

D. To eliminate all rounding errors

4. How many steps does a 16-bit scale have?

A. 65,536 steps

B. 256 steps

C. 16 steps

D. 4 steps

5. What happens when the amplitude of audio falls to its lowest levels?

A. The relative size of the error becomes larger

B. The audio becomes inaudible

C. The quantization error disappears

D. The noise becomes random
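The quantization questions above rest on simple arithmetic, sketched here under stated assumptions: a uniform scale with 2^bits steps spanning -1.0 to 1.0, no dither, and the theoretical dynamic range 20·log10(2^bits). That theoretical figure may differ from perceived values quoted in the book. All function names are illustrative.

```python
import math


def quantization_steps(bits):
    """Number of discrete steps on a scale with the given bit depth."""
    return 2 ** bits


def theoretical_dynamic_range_db(bits):
    """Theoretical dynamic range in dB of an undithered uniform scale."""
    return 20 * math.log10(2 ** bits)


def quantize(sample, bits):
    """Round a sample in [-1.0, 1.0] to the nearest step of the scale."""
    step = 2.0 / quantization_steps(bits)
    return round(sample / step) * step


print(quantization_steps(3), quantization_steps(16))
print(round(theoretical_dynamic_range_db(16), 2))

# On a coarse 3-bit scale the rounding error is small relative to a loud
# sample but swallows a quiet one entirely.
for sample in (0.8, 0.1):
    print(sample, "->", quantize(sample, 3))
```

The last loop shows why the relative size of the error grows as the amplitude falls.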

Page 17

1. What is one of the commonly used types of dither mentioned in the document?

A. TPDF

B. MP3

C. WAV

D. AIFF

2. What does noise shaping concentrate on according to the document?

A. Lower frequencies

B. Higher frequencies

C. Mid frequencies

D. All frequencies

3. What is the perceived dynamic range of 16-bit audio according to the document?

A. 96.0 dB

B. 118.0 dB

C. 120.0 dB

D. 80.0 dB

4. What is the main benefit of 24-bit audio production mentioned in the document?

A. Lower noise floor

B. Higher sample rate

C. Better sound quality

D. Easier editing

5. What can mask any improvements from dithering in a recording chain?

A. High levels of noise

B. Low bit depth

C. High sample rate

D. Poor quality equipment

Page 17 True/False

1. TPDF is a commonly used type of dither.

2. Noise shaping increases the noise level in the audible frequency range.

3. The dynamic range of 16-bit audio is 118.0 dB.

4. Dithering is applied as the last stage of preparing tracks for delivery.

5. A 16-bit noise floor is above what humans can hear.

Page 17

1. What is TPDF and how does it relate to audio dithering?

2. What is the purpose of noise shaping in audio production?

3. How does increasing the bit depth from 16 to 24 bits affect audio quality?

4. What is the significance of the noise floor in audio recording?

5. What precautions should engineers take when applying dither to audio tracks?

Page 18 True/False

1. A DAC converts analog signals to digital code.

2. The sound quality of digital audio depends on sample rate and bit depth.

3. Higher sample rates decrease the frequency bandwidth devices can encode.

4. High-resolution audio has a bit depth of at least 24.

5. The DAC sends the waveform through a reconstruction filter to smooth the signal.

Chapter 3

Page 23 True/False

1. A microphone converts soundwaves from mechanical energy to electrical energy.

2. The diaphragm of a microphone responds to pressure differences between its front and back sides.

3. Soundwaves arriving at the edge of the diaphragm cause it to move.

4. A freely suspended diaphragm produces a bidirectional pickup pattern.

5. Listeners hear an accurate representation of the sound source when the microphone signal is not replicated faithfully.

Pages 25-26

1. What type of pickup pattern does a single diaphragm suspended between two points naturally exhibit?

A. Unidirectional

B. Bidirectional

C. Omnidirectional

D. Cardioid

2. What shape does the cardioid pickup pattern resemble?

A. Circle

B. Square

C. Heart

D. Triangle

3. What happens to the signals generated by the two capsules at the rear of the microphone?

A. They amplify each other

B. They cancel each other

C. They create a feedback loop

D. They are ignored

4. What is the primary function of combining a bidirectional mic with a unidirectional mic?

A. To create a cardioid pattern

B. To increase sound quality

C. To reduce weight

D. To enhance bass response

5. What does the Omni portion of the mic respond to?

A. Sound from the front

B. Sound from the rear

C. Sound from the sides

D. Sound from all directions

Pages 24-25 Questions:

1. What is the basic operating principle of condenser microphones?

2. How does a pressure transducer microphone respond to sound waves?

3. What materials are commonly used for the diaphragm in pressure transducer microphones?

4. What is the purpose of the small holes in the backplate of a pressure transducer?

5. How do manufacturers compensate for high-frequency loss when using omnidirectional microphones in a reverberant sound field?

Page 27 (Figure 3.6) True/False

1. Today's cardioid microphones use principles of acoustic delay to achieve the same result from a single capsule.

2. The first cardioid microphones contained a single capsule.

3. Manufacturers place a delay network behind the diaphragm in cardioid microphones.

4. Acoustic resistance can be used to achieve the desired delay in cardioid microphones.

5. Figure 3.6 shows a complex device with multiple entry slots for sound.

Pages 27-28 Questions:

1. What is the purpose of introducing a delay in capsules for microphones?

2. What are the acceptance angles for cardioid, supercardioid, and hypercardioid microphones?

3. How do microphone designers define an acceptance angle?

4. What is the significance of locating sound sources within a microphone's pickup angle?

5. What is the function of dual diaphragm capsules in microphones?

Pages 28-29

1. What principle do dynamic and ribbon microphones operate on?

A. Electromagnetic induction

B. Capacitive coupling

C. Optical sensing

D. Piezoelectric effect

2. What type of pattern do dynamic microphones usually have?

A. Omnidirectional

B. Cardioid

C. Bidirectional

D. Supercardioid

3. What material is used for the diaphragm in ribbon microphones?

A. Plastic

B. Copper

C. Corrugated aluminum

D. Steel

4. What do ribbon microphones require before their signal can be used in an audio chain?

A. Minimal amplification

B. Considerable amplification

C. No amplification

D. Battery power

5. What is a characteristic of the diaphragm in ribbon microphones?

A. High mass

B. Low mass

C. Large size

D. Thick material

Chapter 4

Page 31 True/False

1. Microphones ideally should respond equally well to all frequencies across the normal range of human hearing, which is 20-20,000 Hz.

2. Manufacturers find it easy to attain a flat frequency response in large spaces.

3. In a diffuse field, rooms tend to absorb treble frequencies.

4. Microphone designers often boost the sensitivity of omnidirectional mics by 2.0-4.0 dB in the region of 10 kHz.

5. The typical response of a microphone shows a sensitivity boost centered on 20 kHz.

Pages 32-33 Questions:

1. What does the polar response of a microphone indicate?

2. How do omnidirectional microphones respond to sound from different directions?

3. What happens to the sensitivity of omnidirectional microphones at higher frequencies?

4. What are the characteristics of cardioid microphones?

5. How do supercardioid and hypercardioid microphones differ from cardioid microphones?

Page 35 True/False

1. All microphones that work on a pressure-gradient principle exhibit proximity effect.

2. The proximity effect is particularly noticeable at distances greater than 30 centimeters.

3. The Inverse Square Law states that sound intensity decreases proportionally to the square of the distance from the source.

4. Sound pressure reduces by half for every doubling of the distance from the source.

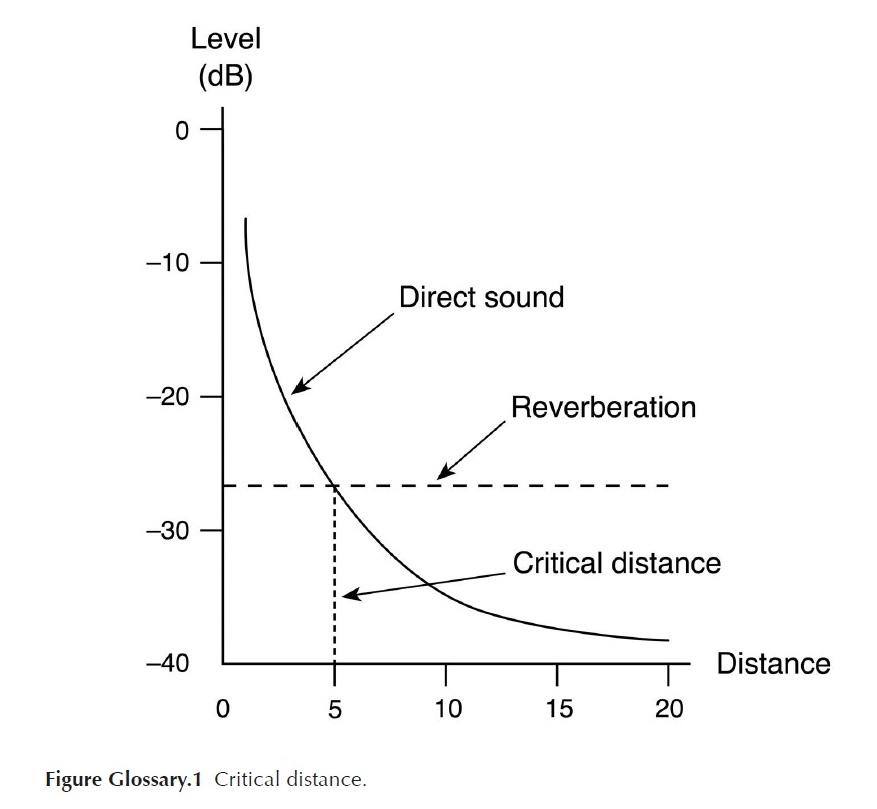

5. The critical distance is the point in a room where direct sound and reverberation are equal in level.
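The Inverse Square Law statements above can be tried out numerically. This is a minimal sketch assuming a point source in a free field (no reflections), which a real room only approximates; the function names are illustrative.

```python
def intensity_ratio(near_distance, far_distance):
    """Sound intensity at the far distance relative to the near one.

    Intensity falls with the square of the distance from the source.
    """
    return (near_distance / far_distance) ** 2


def pressure_ratio(near_distance, far_distance):
    """Sound pressure at the far distance relative to the near one.

    Pressure falls in direct inverse proportion to the distance.
    """
    return near_distance / far_distance


# Doubling the distance from 1 m to 2 m.
print(intensity_ratio(1.0, 2.0))  # intensity falls to a quarter
print(pressure_ratio(1.0, 2.0))   # pressure falls to a half
```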

Pages 32-33

1. What does the polar response of a microphone indicate?

A. Its sensitivity to sounds arriving from any location

B. Its ability to capture sound only from the front

C. Its frequency response range

D. Its physical size

2. What is the typical response pattern of omnidirectional microphones at lower frequencies?

A. A perfect circle

B. A narrow band

C. A wide lobe

D. A figure-eight pattern

3. At what frequency does the omnidirectional microphone begin to exhibit normal narrowing of response?

A. 2.0 kHz

B. 4.0 kHz

C. 8.0 kHz

D. 12.5 kHz

4. What type of microphone features a reasonably wide pickup area at the front and a large null at the rear?

A. Omnidirectional

B. Cardioid

C. Supercardioid

D. Bidirectional

5. What do supercardioid and hypercardioid microphones do compared to cardioid microphones?

A. They have a wider pickup area

B. They restrict the width of their front lobes

C. They capture sound equally from all directions

D. They have no null regions

Pages 34-35 Questions:

1. What is the Random Energy Efficiency (REE) of omnidirectional microphones and how is it used as a reference for directional microphones?

2. How do bi-directional and cardioid microphones compare in terms of their REE and what does this indicate about their sound pickup?

3. What is the distance factor of a cardioid microphone and what does it imply about its placement relative to an omnidirectional microphone?

4. What effect does the distance between a microphone and its sound source have on the recording character?

5. What is the critical distance in microphone placement and why is it significant?

Pages 36-37 Questions:

1. What role does the Inverse Square Law play when a microphone is positioned close to a sound source?

2. How does the distance from the sound source affect the sound pressure level on the rear side of the diaphragm?

3. What discrepancies in sound pressure level were determined by John Woram for a microphone placed at different distances from the sound source?

4. What is the effect of proximity on sound pressure levels for lower frequencies compared to higher frequencies?

5. What does Figure 4.9 illustrate regarding the proximity effect in a cardioid microphone?

Pages 37-39 Phase

1. What does the term 'phase' refer to in the context of a periodic wave?

A. The starting position of a wave in its cycle

B. The frequency of the wave

C. The amplitude of the wave

D. The speed of the wave

2. What occurs when the peaks of one signal coincide with the troughs of another?

A. Constructive interference

B. Destructive interference

C. Phase alignment

D. Wave amplification

3. What is the result when two soundwaves arrive at a microphone at the same time?

A. Destructive interference

B. Constructive interference

C. Phase cancellation

D. Sound distortion

4. What is comb filtering a result of?

A. Phase alignment of frequencies

B. Mathematically related cancellations and reinforcements

C. Increased amplitude of soundwaves

D. Frequency modulation

5. What happens to the amplitude of a frequency when it arrives 'in phase' at two microphones?

A. It decreases

B. It remains the same

C. It doubles

D. It cancels out

Pages 39-40 Questions:

1. What is the 'three-to-one' principle in microphone placement, and how does it help in reducing comb filtering effects?

2. What is comb filtering, and how does it affect sound quality?

3. How does the time delay between microphones influence the audibility of comb filtering?

4. What amplitude differences can occur when two microphones have identical output levels, and how can this be mitigated?

5. What is the recommended attenuation level at one microphone to make comb filtering tolerable for most listeners?
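The comb-filtering questions above can be explored with a short sketch. It assumes two microphones with identical output levels whose signals differ only by a pure time delay; the function names and the 1 ms delay are hypothetical choices for illustration.

```python
import cmath
import math


def comb_notches_hz(delay_ms, max_hz=20000.0):
    """Frequencies up to `max_hz` that cancel when a signal is summed
    with a copy of itself delayed by `delay_ms` (must be > 0).

    Cancellation occurs where the delay equals an odd number of
    half periods: f = (2k + 1) / (2 * delay).
    """
    notches = []
    k = 0
    while True:
        notch = (2 * k + 1) * 500.0 / delay_ms
        if notch > max_hz:
            break
        notches.append(notch)
        k += 1
    return notches


def combined_gain(freq_hz, delay_ms):
    """Amplitude of the summed signals relative to one signal alone."""
    phase = 2 * math.pi * freq_hz * delay_ms / 1000.0
    return abs(1 + cmath.exp(-1j * phase))


print(comb_notches_hz(1.0)[:3])    # first three cancelled frequencies
print(combined_gain(1000.0, 1.0))  # in phase: amplitude doubles
print(combined_gain(500.0, 1.0))   # out of phase: amplitude near zero
```

The evenly spaced cancellations, with reinforcements between them, give the response the tooth-like shape that the term "comb filtering" describes.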

Pages 40-41 True/False

1. Engineers can minimize the negative effect of distortion by reducing the difference in amplitude between the peaks and troughs caused by phase shifts.

2. An attenuation of 9.0 to 10.0 dB at one of the microphones can decrease amplitude differences to about 4.0 dB.

3. Comb filtering becomes tolerable for most listeners at amplitude differences greater than 4.0 dB.

4. The 'three-to-one' principle involves placing two microphones at equal distances from a sound source.

5. The testing procedure for the 'three-to-one' principle was carried out in an anechoic chamber.

Page 41

1. At what distance of the second transducer from the on-axis mic did noticeable reinforcements and cancellations occur?

A. 2 feet (61 centimeters)

B. 4 feet (122 centimeters)

C. 10 feet (305 centimeters)

D. 6 feet (183 centimeters)

2. At what distance did the combined signals parallel the on-axis response of mic number one?

A. 2 feet (61 centimeters)

B. 4 feet (122 centimeters)

C. 10 feet (305 centimeters)

D. 6 feet (183 centimeters)

3. What was the maximum distance at which skilled listeners did not notice audible improvements in sound quality?

A. 2 feet (61 centimeters)

B. 4 feet (122 centimeters)

C. 6 feet (183 centimeters)

D. 10 feet (305 centimeters)

4. What ratio between microphones was found to avoid noticeable phase interference?

A. One-to-one

B. Two-to-one

C. Three-to-one

D. Four-to-one

5. What is the distance in centimeters for 10 feet?

A. 305 centimeters

B. 244 centimeters

C. 183 centimeters

D. 61 centimeters

Pages 42-43 Questions:

1. What is the recommended difference in decibels (dB) between the primary sound source and the background sounds picked up by a microphone in a multiple microphone setup?

2. What principle should recordists follow to maintain sufficient phase integrity in concert or recital hall locations?

3. How can recordists check the attenuation of a microphone during rehearsal?

4. What is the function of the Auto Align plugin in audio recording?

5. What should engineers do in situations where close miking and signal splitting are required?

Pages 42-43

1. What is the recommended difference in dB between microphones in a multiple microphone setup?

A. 5.0 to 6.0 dB

B. 7.0 to 8.0 dB

C. 9.0 to 10.0 dB

D. 11.0 to 12.0 dB

2. What principle should recordists follow to maintain sufficient phase integrity?

A. Two-to-one principle

B. Three-to-one principle

C. Four-to-one principle

D. Five-to-one principle

3. What does the Auto Align plugin do?

A. Enhances the sound quality

B. Analyzes pairs of signals for time delay

C. Records audio signals

D. Adjusts microphone placement

4. What should the level at the remaining microphone drop by when the performer stops playing?

A. At least 5.0 dB

B. At least 7.0 dB

C. At least 9.0 dB

D. At least 11.0 dB

5. What does the Auto Align plugin allow engineers to enhance?

A. The volume of the audio

B. The sense of space

C. The clarity of the audio

D. The pitch of the audio
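One way to see how a distance ratio between microphones translates into a level difference is the sketch below. It assumes free-field inverse-distance falloff of sound pressure, which a concert hall with reflections only approximates, so the result is a rough guide rather than the book's measured figure. The function name is illustrative.

```python
import math


def distance_attenuation_db(near_distance, far_distance):
    """Level drop in dB at the far microphone relative to the near one,
    assuming sound pressure falls in inverse proportion to distance."""
    return 20 * math.log10(far_distance / near_distance)


# A second microphone three times as far from the source as the first.
print(round(distance_attenuation_db(1.0, 3.0), 1))
```

Under this assumption a three-to-one distance ratio gives a drop of roughly 9.5 dB.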

Chapter 5

Pages 45-46

1. What is the optimum angle for stereo perception according to the document?

A. 15°

B. 30°

C. 45°

D. 60°

2. What two cues do listeners use to locate the origin of sounds?

A. Frequency and amplitude

B. Intensity and time of arrival

C. Pitch and loudness

D. Volume and distance

3. What is required for listeners to perceive sounds as coming from various points along the horizontal plane?

A. No level differences

B. Level and time discrepancies

C. Only time differences

D. Only level differences

4. What happens if the outer instruments lie beyond the pickup angle in stereo miking?

A. They will be localized properly

B. They will not be localized properly

C. They will create a phantom image

D. They will enhance the stereo effect

5. What is the effect of a difference of 15.0 to 20.0 dB or a delay of about 1.5 ms?

A. It causes the phantom image to shift to one of the speakers

B. It creates a stronger stereo effect

C. It eliminates the need for microphones

D. It reduces sound pressure level

Pages 45-46 Questions:

1. What is the significance of phase integrity in stereo recording?

2. How do listeners use intensity and time of arrival to locate sounds?

3. What is the optimum angle for stereo perception and why is it important?

4. What happens when there are no level or time differences in a stereo playback system?

5. How does stereo miking utilize level and time-of-arrival differences?

Pages 47-48

1. What is the purpose of using coincident pairs of microphones?

A. To eliminate time lags between microphones

B. To increase the volume of sound

C. To reduce background noise

D. To capture sound from multiple directions

2. What type of microphones are generally used in an X-Y configuration?

A. Omnidirectional microphones

B. Dynamic microphones

C. Cardioid microphones

D. Condenser microphones

3. What angle is commonly used between microphones in an X-Y configuration?

A. 60° to 90°

B. 90°

C. 80° to 130°

D. 45°

4. What effect does the X-Y configuration have on the stereo sound stage?

A. Creates a narrow sound stage

B. Produces a strong sense of lateral spread

C. Eliminates all background noise

D. Only captures sound from the center

5. What is the advantage of using omni pairs in X-Y configurations?

A. They create a stronger central image

B. They maintain a stable image when a soloist moves

C. They capture sound from a wider angle

D. They are less sensitive to off-axis sounds

Pages 48-49 Questions:

1. What is the Blumlein technique and who developed it?

2. How does the Blumlein technique create a phantom image during playback?

3. What happens to the output of the microphones when sound sources are placed to the right or left of the 0° axis?

4. What are the advantages of positioning the Blumlein microphones within the critical distance in a hall?

5. What is one disadvantage of the Blumlein technique related to side reflections?

Pages 48-49

1. Who developed the Blumlein technique?

A. Thomas Edison

B. Alan Blumlein

C. Leonardo da Vinci

D. Nikola Tesla

2. What angle are the microphones set at in the Blumlein technique?

A. 45°

B. 90°

C. 180°

D. 30°

3. What does the Blumlein technique primarily capture during playback?

A. Direct sound only

B. Ambient sound only

C. A strong phantom image

D. Only lateral reflections

4. What is a disadvantage of the Blumlein technique?

A. It captures only direct sound

B. It requires expensive equipment

C. It may result in a 'hollow' sound quality

D. It cannot be used in live settings

5. What happens to the output of the microphones when sound sources are placed at a 45° angle?

A. Both microphones increase output

B. One microphone reaches maximum output while the other is zero

C. Both microphones remain silent

D. The output is equal for both microphones

Pages 49-50 True/False

1. The Mid-Side (M/S) technique was developed in the 1950s by Holger Lauridsen of Danish State Radio.

2. A cardioid microphone captures the sides of the ensemble in the M/S technique.

3. The M/S technique allows engineers to manipulate the two signals to change the width of the stereo sound stage.

4. In the M/S technique, the signal from the side microphone is sent to a single channel panned center.

5. More side signal in the M/S technique broadens the stereo sound stage.
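
Statements 3 and 5 concern how the mid and side signals are combined. The sketch below shows the usual sum-and-difference decode for a single sample pair, for students who want to experiment with it. The function name `ms_decode` and the `side_gain` parameter are illustrative assumptions and are not taken from the book.

```python
def ms_decode(mid, side, side_gain=1.0):
    """Decode one mid/side sample pair into a left/right pair.

    Assumed convention: left = mid + side, right = mid - side.
    side_gain scales the side signal before decoding.
    """
    scaled_side = side * side_gain
    left = mid + scaled_side
    right = mid - scaled_side
    return left, right


# With no side signal the two channels are identical (a mono image).
print(ms_decode(1.0, 0.5, side_gain=0.0))
# Raising the side gain increases the difference between the channels.
print(ms_decode(1.0, 0.5, side_gain=1.0))
print(ms_decode(1.0, 0.5, side_gain=2.0))
```

Comparing the left and right values for different `side_gain` settings shows how the difference between the channels changes with the amount of side signal.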

Pages 51-52 True/False

1. The ORTF technique uses two cardioid microphones angled at 90°.

2. The NOS configuration separates the microphone capsules by 30 centimeters.

3. The ORTF technique was developed by the Nederlandse Omroep Stichting.

4. Comb filtering occurs at frequencies above 1 kHz in the ORTF technique.

5. The NOS technique is more useful for monophonic broadcasting than the ORTF technique.

Pages 51-52 Questions:

1. What is the purpose of using near-coincident arrays in audio recording?

2. Describe the ORTF microphone configuration and its significance.

3. What are the characteristics of the sound produced by the ORTF technique at different frequencies?

4. Explain the NOS microphone configuration and its limitations.

5. What do recordists appreciate about the ORTF array according to Bruce and Lenny Bartlett?

Chapter 6

Pages 59-60

1. What is the primary focus of critical listening during the recording process?

A. Evaluating sound quality

B. Choosing instruments

C. Editing lyrics

D. Selecting a recording studio

2. Which of the following is NOT one of the criteria for assessing audio signals?

A. Transparency

B. Timbre

C. Volume

D. Stereo Image

3. What does the term 'Loudness' refer to in the context of audio recording?

A. The clarity of details

B. The dynamic range of the music

C. The balance of sound sources

D. The tonal characteristics of sound

4. What aspect of sound does 'Spatial Environment' assess?

A. The clarity of lyrics

B. The ambience for sound sources

C. The volume of the recording

D. The type of instruments used

5. What is the goal of removing extraneous noise from a recording?

A. To enhance the stereo image

B. To distract listeners

C. To improve communication of performers' emotion

D. To increase the volume of the track

Pages 59-60 Questions:

1. What are the key criteria for assessing audio signals during the recording process according to the European Broadcasting Union?

2. How does the perception of room ambience affect the recording of soloists or ensembles?

3. What role does transparency play in critical listening during the recording process?

4. Why is it important for engineers to ensure the intelligibility of individual elements in a recording?

5. What is the significance of dynamic range in a music recording?

Page 60 True/False

1. Clipping occurs in digital systems when the signal exceeds the 0.0 dBFS threshold.

2. Analog consoles produce noticeable distortion only when the input signal exceeds the nominal 0.0 dBu level by 10.0 decibels.

3. Recordists should set their levels from the loudest passages to avoid clipping.

4. Approximately 20.0 dB of headroom is recommended to prevent peak levels from causing distortion in digital systems.

5. The noise floor of 24-bit systems can remain 90.0 to 100.0 dB below the average signal level.
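
The statements above use decibel values relative to digital full scale. The sketch below converts such values to linear amplitude and measures headroom, for students who want to see what the numbers mean. It assumes samples are normalized so that full scale (0.0 dBFS) equals an amplitude of 1.0. The function names and the -18.0 dBFS peak in the usage are hypothetical and are not taken from the book.

```python
def dbfs_to_linear(level_dbfs):
    """Convert a level in dBFS to linear amplitude (full scale = 1.0)."""
    return 10.0 ** (level_dbfs / 20.0)


def headroom_db(peak_dbfs):
    """Return the distance in dB between a peak level and 0.0 dBFS."""
    return 0.0 - peak_dbfs


def would_clip(sample):
    """Return True if a normalized sample lies beyond full scale."""
    return abs(sample) > 1.0


# Hypothetical session: the loudest passage peaks at -18.0 dBFS.
peak = -18.0
print(round(dbfs_to_linear(peak), 4), headroom_db(peak), would_clip(dbfs_to_linear(peak)))
```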

Pages 61-62

1. What is the critical distance in a room?

A. The distance where sound is completely absorbed

B. The distance where only direct sound is captured

C. The distance where only reverberation is captured

D. The distance where direct and reverberant sound are equal

2. What does an SPL meter help engineers find?

A. The best recording software

B. The type of microphones to use

C. The critical distance of a room

D. The volume of the sound source

3. What is the effect of doubling the distance from the sound source in the free field?

A. The level of direct sound drops by 6.0 dB

B. The level of reverberated sound increases

C. The sound becomes clearer

D. The sound is completely absorbed

4. What is the purpose of placing microphones close to the sound source?

A. To capture clarity of detail

B. To capture only reverberation

C. To eliminate all sound

D. To create a louder sound

5. What do engineers do if a single stereo pair of microphones does not produce satisfactory results?

A. Stop recording

B. Use two pairs of microphones

C. Change the recording location

D. Use a different type of instrument
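
Question 3 above relies on the inverse-square law. The sketch below computes the change in direct-sound level between two distances, assuming an idealized point source in a free field with no reflections. The function name is illustrative and does not come from the book.

```python
import math


def direct_level_change_db(reference_distance, new_distance):
    """Change in direct-sound level (dB) when moving between two distances.

    Assumes a point source in a free field, where sound pressure is
    inversely proportional to distance.
    """
    return -20.0 * math.log10(new_distance / reference_distance)


# Compare the level at 1 m with the level at 2 m and at 4 m.
print(round(direct_level_change_db(1.0, 2.0), 2))
print(round(direct_level_change_db(1.0, 4.0), 2))
```

In a real hall the reverberant field does not follow this rule, which is why the critical distance depends on the room.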

Pages 61-62 Questions:

1. What factors influence the placement of microphones in relation to room ambience during tracking?

2. How do engineers determine the critical distance in a room?

3. What is the significance of the sweet spot in microphone placement?

4. What is the third option for microphone placement mentioned in the document, and what does it require?

5. What considerations do recordists take into account when deciding on the perspective to present to listeners?

Chapter 7

Pages 65-66

1. What is the purpose of an equalizer in audio editing?

A. To boost low frequencies

B. To block all frequencies

C. To modify the frequency content of signals

D. To enhance the volume of tracks

2. What type of filter allows frequencies above a cut-off point to pass?

A. High-pass filter

B. Low-pass filter

C. Band-pass filter

D. Notch filter

3. What is the cut-off frequency defined as?

A. The point where all frequencies are blocked

B. The point at which the response is 3.0 dB below the nominal level

C. The maximum frequency allowed through the filter

D. The minimum frequency allowed through the filter

4. What does a drop of 6.0 dB per octave indicate?

A. A steep attenuation slope

B. A gentle attenuation slope

C. No attenuation

D. Complete signal loss

5. In a band-pass filter, what type of frequencies are allowed to pass?

A. All frequencies

B. Only frequencies above a certain point

C. Only low frequencies

D. Only frequencies within a defined range
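
Questions 3 and 4 above mention the cut-off frequency and the attenuation slope. The sketch below evaluates the magnitude response of an idealized first-order high-pass filter so that both ideas can be checked numerically. The first-order model, the function name and the 100 Hz cut-off are assumptions for illustration and do not describe any plugin discussed in the book.

```python
import math


def highpass_gain_db(freq_hz, cutoff_hz):
    """Gain in dB of an ideal first-order high-pass filter at freq_hz."""
    ratio = freq_hz / cutoff_hz
    magnitude = ratio / math.sqrt(1.0 + ratio * ratio)
    return 20.0 * math.log10(magnitude)


cutoff = 100.0  # hypothetical cut-off frequency in Hz
# Gain exactly at the cut-off frequency.
print(round(highpass_gain_db(cutoff, cutoff), 2))
# Gain one octave apart, well below the cut-off, to estimate the slope.
upper = highpass_gain_db(cutoff / 8, cutoff)
lower = highpass_gain_db(cutoff / 16, cutoff)
print(round(upper - lower, 2))
```

Steeper filters behave like several such stages in series, so their slopes come in multiples of the first-order value.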

Pages 65-66

1. What historical issue in telephonic communication led to the development of equalizers?

2. How do audio editors use equalization (EQ) in their work?

3. What are the different types of digital filters mentioned in the document, and how are they classified?

4. What is the significance of the cut-off frequency in a filter?

5. What is the difference between a gentle and a steep attenuation slope in filters?

Pages 67-68 True/False

1. Shelf filters can only cut frequencies and cannot boost them.

2. Parametric filters provide flexibility in shaping the frequency content of soundwaves.

3. The Pro-Q2 plugin allows users to incorporate other types of filters beyond the three main adjustable parameters.

4. A gain change of -3.0 dB was applied to one of the center frequencies in the Pro-Q2 filter example.

5. Shelf filters do not allow any frequencies to pass through.

Pages 68-69

1. What does the Q factor refer to in audio engineering?

A. The degree of alteration affecting neighboring frequencies

B. The maximum gain change applied to a frequency

C. The bandwidth of a cut or boost

D. The center frequency of a track

2. What is the effect of a high-pass filter as described in the document?

A. It boosts low frequencies

B. It alters the frequency balance by up to 12.0 dB

C. It adds warmth around 100 Hz

D. It removes rumble in the low end

3. How can audio engineers shape frequency areas according to the document?

A. By using only high-pass filters

B. Through bell-style curves and incorporating pass and shelf filters

C. By adjusting the volume of the track

D. By limiting the number of frequency bands used

4. What does a narrower Q setting affect according to the document?

A. It spreads the change over a larger bandwidth

B. It restricts the range of frequencies affected

C. It increases the maximum gain change

D. It has no effect on the neighboring frequencies

5. What is the maximum gain change that can be applied in Voxengo's Marvel CEQ?

A. 10.0 dB

B. 15.0 dB

C. 12.0 dB

D. 20.0 dB

Page 70 Questions:

1. What is the purpose of a high-pass filter in audio mixing?

2. How can a shelf filter for higher frequencies enhance the audio mix?

3. What is the significance of sweeping in audio mixing?

4. Why is the 2-5 kHz frequency range important in audio mixing?

5. What did the research by Fletcher and Munson reveal about human hearing and frequency perception?

Chapter 8

Page 73 Questions:

1. What was the original purpose of compressors in audio engineering?

2. How do modern compressors differ from the manual methods used by engineers before their invention?

3. What are the three main stages of a compressor?

4. What user-adjustable features are found in the gain-control stage of a compressor?

5. Why do engineers apply make-up gain after the compression process?

Pages 73-75

1. What does the Peak/RMS switch in a compressor allow users to choose?

A. The way the plugin detects the level of a signal

B. The type of audio file to compress

C. The amount of gain reduction

D. The frequency range to compress

2. What does RMS stand for?

A. Real Mean Sound

B. Root Mean Square

C. Relative Maximum Signal

D. Random Mean Square

3. What happens when the signal rises above the threshold in a compressor?

A. The threshold is adjusted

B. The compressor stops working

C. The signal is amplified

D. The compressor automatically lowers the level

4. According to some engineers, what should compressors respond to for instruments and voices to sound better?

A. Averaged loudness

B. Peak values

C. RMS values only

D. Average loudness only

5. What does the threshold set in a compressor?

A. The minimum signal level

B. The maximum gain allowed

C. The level at which the compressor begins to reduce amplitude

D. The frequency response of the compressor

Pages 75-77 True/False

1. The compression ratio is determined by the amount of gain reduction that occurs when a signal exceeds the threshold.

2. A 2:1 compression ratio means that a 2.0 dB increase in input level results in a 1.0 dB increase in output level.

3. At a 4:1 ratio, an 8.0 dB increase in input level produces an output rise of 2.0 dB.

4. A ratio of 8:1 means that every 8.0 dB above the threshold at input is reduced to 1.0 dB above the threshold at output.

5. At ratios above 10:1, the plugin effectively becomes a brickwall limiter.
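
The ratio arithmetic in the statements above can be checked with a short sketch. It assumes an idealized hard-knee compressor whose output rises by 1/ratio dB for every decibel of input above the threshold. The function name and the -20.0 dB threshold are hypothetical and are not taken from the book.

```python
def compressed_level_db(input_db, threshold_db, ratio):
    """Output level of an idealized hard-knee compressor (no make-up gain)."""
    if input_db <= threshold_db:
        # Signals at or below the threshold pass unchanged.
        return input_db
    # Above the threshold, the overshoot is divided by the ratio.
    return threshold_db + (input_db - threshold_db) / ratio


threshold = -20.0  # hypothetical threshold in dB
for ratio, overshoot in [(2.0, 2.0), (4.0, 8.0), (8.0, 8.0)]:
    output = compressed_level_db(threshold + overshoot, threshold, ratio)
    print(f"{ratio:.0f}:1, {overshoot} dB over -> {output - threshold} dB over")
```

Real compressors add attack, release and knee behaviour on top of this static curve, so the sketch describes only the steady-state relationship.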

Pages 77-78 Questions:

1. What is the role of the attack control in a compressor?

2. How does the release control affect the compressor's output?

3. What is the difference between soft knee and hard knee in a compressor?

4. What is the purpose of the final gain control in a compressor?

5. How does the hold control function in a compressor?

Page 79 True/False

1. Limiters allow audio below a specified decibel level to pass freely while attenuating peaks that cross a user-defined threshold.

2. Brickwall limiters have a ratio of less than 10:1.

3. The true-peak limiter ISL 2 measures true peaks and allows engineers to set a maximum decibel level for signals.

4. Users can adjust the amplitude of the incoming signal through the Input Gain box in the ISL 2 plugin.

5. The TPLim box is used to select a target for the inter-sample peak limit of the signal in the ISL 2 plugin.

Pages 80-81 True/False

1. The GUI displays the amount of gain reduction applied to each channel in dBTP.

2. The history graph shows only the input level of the audio signal.

3. When only one side triggers the limiter, gain reduction is applied to both channels equally.

4. The meter indicates the degree of steering that has occurred when limiters are employed independently on the two stereo channels.

5. Ducking occurs when one signal is raised above another.

Pages 81-82

1. What does the Look Ahead box allow engineers to specify?

A. The amount of gain reduction

B. How much time the limiter has to react to the incoming signal

C. The output level in dBTP

D. The target bit depth

2. What does the Release function establish?

A. How quickly the limiter returns to no gain reduction

B. The amount of independence of the limiters

C. The target bit depth

D. The type of white noise used

3. What does the Auto button do in the plugin?

A. It analyzes the incoming audio signal for low-frequency information

B. It sets the target bit depth

C. It adjusts the output according to the amount of Input Gain

D. It allows users to hear only the difference between input and output

4. What does the Dither box set?

A. The degree of independence of the limiters

B. The amount of gain reduction

C. The nature of the noise shaping

D. The target bit depth

5. What does the Shaping function specify?

A. The target bit depth

B. The nature of the noise shaping

C. The amount of independence of the limiters

D. The output level in dBTP

Page 82 True/False

1. The bx_dynEQ is a static EQ that does not change its gain settings dynamically.

2. Compression can be applied in conjunction with an EQ filter according to the document.

3. Traditional static EQs are suitable for intermittent correction of frequency problems.

4. The bx_dynEQ can assist engineers in applying gain reduction to user-defined frequency bands.

5. Brainworx has developed the bx_dynEQ software for dynamic EQ filtering.

Pages 83-84

1. What type of graphical interface does Sonnox use for their Oxford Dynamic EQ?

A. Replicates hardware control knobs

B. Graphical interface similar to parametric EQ plugins

C. Text-based interface

D. Analog-style interface

2. How many distinct frequency bands can be applied in the Oxford Dynamic EQ?

A. Three

B. Four

C. Five

D. Six

3. What does the 'Detect' column in the Oxford Dynamic EQ allow users to choose?

A. The way the plugin identifies the level of a signal

B. The type of filter to apply

C. The overall output level

D. The target gain for the band

4. What does the Attack parameter determine in the Oxford Dynamic EQ?

A. The overall output level

B. The resting gain of the equalizer band

C. The type of filter applied

D. How quickly the band approaches the target level

5. What is the purpose of the Trim control in the Oxford Dynamic EQ?

A. To adjust the Q of the bell filter

B. To set the offset gain

C. To adjust the overall output of the plugin

D. To choose the frequency bands

Pages 83-84 Questions:

1. Describe the approach Sonnox takes in designing the GUI for their Oxford Dynamic EQ compared to other dynamic EQs.

2. What types of filters does the Oxford Dynamic EQ provide, and how can they be applied to the frequency bands?

3. Explain how the plugin prevents over-processing of the audio signal.

4. What functionality does the headphone icon provide in the Oxford Dynamic EQ?

5. How do the Attack and Release parameters affect the dynamics processing in the Oxford Dynamic EQ?

Pages 84-85 True/False

1. Recording a vocalist through a closely placed microphone can exaggerate sibilance associated with consonants like 's' and 'sh'.

2. Audio engineers should always increase the energy of the bands containing sibilant frequencies to avoid listener fatigue.

3. Some audio editors manually lessen the effect of harsh consonants through track automation or by cutting out sibilant moments.

4. Notched equalization is applied within the 4-10 kHz range to ease the stridency of problematic areas.

5. De-essers are specialized compressors designed to automate the process of reducing sibilance.

Pages 86-87 Questions:

1. Explain the basic operation of a de-esser and how it affects the vocal track.

2. What features does the Sonnox SuprEsser offer to audio editors for treating excessive sibilance?

3. Describe the function of the band-pass and band-reject filters in the Sonnox SuprEsser.

4. What are the three listening modes available in the Sonnox SuprEsser, and what do they do?

5. How does the de-esser ensure that listeners do not hear the filtered signal sent along the side chain?

Pages 86-87

1. What principle do de-essers work on?

A. Side chaining

B. Compression

C. Equalization

D. Reverb

2. What does the de-esser do to the original vocal track?

A. Enhances it

B. Increases volume

C. Adds reverb

D. Attenuates sibilance

3. What type of filters does the Sonnox SuprEsser use?

A. Low-pass and high-pass

B. Band-pass and band-reject

C. Notch and shelf

D. All-pass and comb

4. What does the Inside button do in the Sonnox SuprEsser?

A. Solos the original signal

B. Blends the two signals

C. Solos the output of the band-pass filter

D. Reveals the output of the band-reject filter

5. What is the purpose of the band-pass filter in the Sonnox SuprEsser?

A. To isolate problematic audio frequencies

B. To enhance the overall sound

C. To add effects

D. To compress the entire signal

Pages 87-88

1. What does the horizontal line at 9 indicate in the band-pass filter?

A. The peak level of the problematic sound

B. The threshold level

C. The center frequency of the band

D. The extent of gain reduction achieved

2. What does the gain-reduction meter show?

A. The input signal

B. The extent of gain reduction achieved

C. The peak level of sound

D. The center frequency of the band

3. How can the user set the upper and lower limits of the band-pass filter?

A. By dragging the vertical lines

B. By clicking on the graph

C. By adjusting the threshold level

D. By using the gain-reduction meter

4. What does the vertical line at 6 represent?

A. The threshold level

B. The peak level of sound

C. The frequency with the greatest energy

D. The extent of gain reduction

5. What happens when the automated threshold follows the general level?

A. Peak reductions remain the same

B. The gain-reduction meter resets

C. The band-pass filter is disabled

D. The input signal is muted

Chapter 9

Pages 89-90 Figure 9.1

1. What do plugins of the reflection-simulation type primarily generate?

A. Spatial effects

B. Direct sound

C. Pre-delay

D. Decay time

2. What is pre-delay in the context of room reverberation?

A. The time gap between direct sound and processed sound

B. The time gap between early and late reflections

C. The time gap between direct sound and late reflections

D. The time gap between dry sound and wet sound

3. What do smaller intimate halls typically have for pre-delays?

A. 15-18 ms or less

B. 30-40 ms

C. 10-15 ms

D. 20-25 ms

4. What does Sonnox's Oxford Reverb allow users to set regarding the simulated room?

A. Shape and size

B. Only size

C. Only shape

D. Only decay time

5. What is the purpose of the pre-delay function in a plugin?

A. To define when reflections interact with direct sound

B. To adjust the decay time

C. To control the room size

D. To equalize the sound

Page 90 Questions:

1. What features does Sonnox's Oxford Reverb offer for controlling early reflections?

2. How does the 'Taper' control in Oxford Reverb affect the sound?

3. What is the purpose of the 'Absorption' control in the Oxford Reverb plugin?

4. What controls are available for shaping the reverb tail in Oxford Reverb?

5. How does increasing the 'Feedback' control in Oxford Reverb affect the sound?

Page 91 Figure 9.2

Questions:

1. What is the purpose of the EQ section in plugins like Oxford Reverb?

A. To enhance low frequencies

B. To emulate natural frequency response of physical spaces

C. To increase high-frequency content

D. To reduce overall volume

2. Why do rooms absorb high frequencies more easily than low frequencies?

A. Because high frequencies are louder

B. Because low frequencies travel further

C. Because of the physical properties of sound

D. Because high frequencies are less desirable

3. What effect does artificial reverberation have on a dry signal?

A. Makes it sound brighter

B. Makes it sound darker

C. Has no effect

D. Makes it sound more natural

4. What can be done to mitigate the effect of undesirable low-end content in reverb?

A. Increase high frequencies

B. Apply EQ judiciously

C. Add more reverb

D. Reduce the volume

5. What is one way to create greater warmth in a reverb sound?

A. Boost high frequencies

B. Apply a gentle boost to lower frequencies

C. Reduce the reverb time

D. Increase the dry signal level

Page 91 Questions:

1. What role does 'Overall Size' play in creating the aural image of space in reverberation?

2. How does 'Dispersion' affect the reflections in reverberation?

3. What is the effect of 'Phase Difference' on the stereo sound field?

4. Why is EQ used in reverberation plugins, and what is its effect on high frequencies?

5. What adjustments can be made to lower frequencies in reverberation to enhance warmth?

Page 92 Figure 9.3 True/False

1. The Nimbus GUI has three main panes.

2. The Pre-delay parameter in Nimbus helps to decrease the clarity of the signal.

3. The Low-Mid Balance dial adjusts the reverb time for lower frequencies only.

4. In larger spaces, reverberation lasts longer in lower frequencies according to the document.

5. Damping controls the way the highest frequencies are affected in the reverb.

Pages 92-93 Figure 9.4

1. What is the purpose of the Pre-delay in reverb settings?

2. How does the Reverb Size affect the sound of the reverb?

3. What does the Damping Frequency knob control in reverb settings?

4. What is the effect of the Width control on reverb?

5. What is the function of the Tail Suppress feature in reverb settings?

Page 94 Figure 9.6

1. What are the three general types of reverb that engineers can select from in the Attack pane?

2. How does the Diffuser Size knob affect the reverb settings?

3. What is the purpose of the Envelope Attack control in the plugin?

4. What does the Envelope Time control do in the plugin?

5. How does the Envelope Slope controller affect the signal as it enters the plugin?

Page 94 Figure 9.5 (Figure 9.6)

1. What does the Output pane contain for controlling the reverb settings?

A. Dials for controlling level and EQ of early and late reflections

B. Only a selection of filters for input signal

C. A single dial for overall reverb control

D. A visual representation of sound waves

2. What types of reverb can engineers select in the Attack pane?

A. Plate, chamber, and hall

B. Room, hall, and echo

C. Chamber, echo, and delay

D. Plate, room, and chamber

3. What does the Diffuser Size knob model?

A. The dimensions of irregularities on reflective surfaces

B. The overall volume of the reverb

C. The type of reverb used

D. The speed of sound in the room

4. What does the Envelope Attack control?

A. The way the signal enters the plugin

B. The overall reverb time

C. The type of reflections

D. The frequency of the input signal

5. What effect does a gradual slope have when set with the Envelope Slope controller?

A. Filters the later energy quite strongly

B. Increases the early reflections

C. Decreases the overall reverb time

D. Has no effect on the signal

Page 95 Figure 9.7 True/False

1. The Early subpage button allows users to adjust parameters related to early reflections.

2. Smaller values on the Early Attack dial produce weaker early reflections.

3. The Early Time knob adjusts the length of time over which early reflections are spread.

4. The Early Slope dial models air absorption through a high-pass filter.

5. Early Pattern allows the user to choose between five distinct groupings of early reflections.

Pages 96-97 Figure 9.8

1. What are the three main functions of the dials in the Warp pane?

2. How does the Threshold dial affect the compressor's processing?

3. What is the purpose of the Cut button in the Warp pane?

4. What does the Attack dial control in the compressor?

5. What options are available for changing the bit depth in the Warp pane?

Pages 97-98 Figures 9.9, 9.10 True/False

1. The Verb Session plugin's GUI is divided into three main areas.

2. The time-structure display shows the main parameters of reverberation.

3. The initial early reflections are represented by a group of horizontal bars.

4. The solidly colored area in the time-structure display depicts the dense wash of late reflections.

5. The plugin generates frequency bands that are shown by the decay curves in the time-structure display.

Page 99 Figure 9.11 True/False

1. Sonnox includes early-reflection controls for width, taper, feed along, feedback, and absorption.

2. The Width control in Sonnox affects the loudness of the reflections.

3. Absorption models the amount of high-frequency reduction that can occur in a room.

4. Reverb Time sets the length of time in seconds that it will take for the tail to fade to silence.

5. Diversity alters the width of the reverb's stereo image.

Page 99 Figure 9.11

1. What does the Room Size knob control in the plugin?

A. The volume of the room in cubic meters

B. The frequency of the audio

C. The gain of the early reflections

D. The length of the reverb tail

2. What is the suggested Pre-Delay value for the Musikvereinssaal?

A. 20.1 ms

B. 15 ms

C. 12 ms

D. 25 ms

3. What does the Decay Time knob specify?

A. The volume of the room

B. The length of the reverb tail

C. The frequency of the audio

D. The gain of the early reflections

4. What effect do longer pre-delays have on sound?

A. They make the sound louder

B. They help listeners distinguish between direct and reflected sound

C. They reduce the reverb effect

D. They increase the volume of the source

5. What is the default setting for the Damping sliders?

A. 50%

B. 75%

C. 100%

D. 125%

Page 100 Figures 9.12-9.13 True/False

1. Engineers can alter the tonal quality of the reverb through the filter pane in the upper right corner of the GUI.

2. The filter pane has only one adjustable band for frequency spectrum adjustments.

3. The Input slider determines the level of the signal that exits the plugin.

4. The Dry/Wet knob designates the mix of untreated and treated signal that leaves the plugin.

5. The default setting of the Dry/Wet knob is 50%.

Page 101 Figure 9.14

1. What type of controls does FabFilter focus on in Pro-R?

A. Technical controls

B. Non-technical controls

C. Complex controls

D. Basic controls

2. What does the Distance knob in Pro-R replicate?

A. The effect of moving closer or farther from the sound source

B. The effect of changing the reverb type

C. The effect of adjusting the mix level

D. The effect of altering the stereo width

3. What does the Brightness knob control in Pro-R?

A. The balance between high and low frequencies

B. The overall volume of the reverb

C. The decay rate of the reverb

D. The stereo width of the sound

4. What feature does Pro-R use instead of a crossover system for modifying decay rates?

A. Decay-rate EQ

B. Parametric EQ

C. Dynamic EQ

D. Static EQ

5. What does the Character control in Pro-R alter?

A. The style of the reverb

B. The overall volume of the reverb

C. The stereo width of the sound

D. The decay time of the reverb

Page 102 Figures 9.15-9.16

1. What is the principle behind convolution in reverb plugins?

2. How do engineers create impulse responses for convolution reverb?

3. What challenges do convolution reverbs face regarding impulse responses?

4. What unique approach does EastWest's Spaces reverb take in capturing impulse responses?

5. What controls do users have access to in the GUI of EastWest's Spaces II?

Page 102 Convolution

1. What does the term 'convolution' refer to in the context of audio processing?

A. The blending of one signal with another

B. The recording of sound in a studio

C. The process of deconvolution

D. The simulation of sound in a digital format

2. What is used to create impulse responses for reverb plugins?

A. A series of discrete measurements

B. Previously recorded impulse responses

C. Room reflections from an initial stimulus

D. A full-range frequency sweep

3. What is a common issue with convolution reverbs if impulse responses are not created carefully?

A. They can sound too natural

B. They can result in sterile simulations

C. They require less processing power

D. They do not use room reflections

4. How does EastWest's Spaces reverb differ from traditional convolution reverbs?

A. It uses generalized responses of a space

B. It focuses on specific instruments' reverberation characteristics

C. It does not require impulse responses

D. It eliminates the need for mathematical calculations

5. What can users adjust in the GUI of Spaces II?

A. The type of audio input

B. The length of the pre-delay

C. The number of impulse responses

D. The type of room reflections
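
The questions above treat convolution as the blending of a dry signal with an impulse response. The sketch below shows direct discrete convolution on short lists of samples, written in plain Python for readability. Real plugins use much faster methods, and the sample values here are invented for illustration.

```python
def convolve(dry, impulse_response):
    """Direct discrete convolution of a dry signal with an impulse response."""
    out = [0.0] * (len(dry) + len(impulse_response) - 1)
    for i, sample in enumerate(dry):
        for j, tap in enumerate(impulse_response):
            # Each input sample triggers a scaled, delayed copy of the response.
            out[i + j] += sample * tap
    return out


# Hypothetical data: a single click and a two-tap "room" with one echo.
click = [1.0, 0.0, 0.0]
room = [1.0, 0.5]
print(convolve(click, room))
```

Feeding a single click through the function returns the impulse response itself, which is why an impulse response characterizes how a room treats any input.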

Chapter 10

Page 105 True/False

1. A container holds digital audio information along with metadata.

2. Lossless codecs achieve data compression by removing information that humans do not hear well.

3. WAV and AIFF are both standard uncompressed file types for audio.

4. FLAC is a lossy audio codec that compresses data without any loss of audio quality.

5. ALAC uses an m4p audio-only container and compresses audio data with no loss of information.

Page 106 True/False

1. The mp3 codec was developed by the Fraunhofer Institute in collaboration with other institutions.

2. AAC is a replacement for the mp3 codec and was launched by Apple in 2003.

3. Vorbis is a patented codec that is used in the Ogg container format.

4. The mp3 codec compresses audio data to occupy about 50% of the original file's storage space.

5. AAC and ALAC files both use the extension m4a.

Page 105

1. What is the primary function of a container in digital audio files?

2. What are the two types of compression methods used by codecs?

3. What is the difference between FLAC and ALAC in terms of their container formats?

4. What is the significance of the Free Lossless Audio Codec (FLAC) in the audio industry?

5. What are the two standard uncompressed file types available to recordists?

Page 107

1. What percentage of the original file's storage space does an mp3 typically occupy?

A. 5%-6%

B. 9%-10%

C. 15%-20%

D. 25%-30%

2. In what year was the mp3 codec named?

A. 1989

B. 1991

C. 1995

D. 2003

3. What is the main problem with the perceptual coding used to create an mp3?

A. Loss of sound quality

B. Increased file size

C. Compatibility issues

D. Limited playback devices

4. What audio container does AAC employ?

A. m4a

B. mp3

C. wav

D. m4p

5. Which organization created the Vorbis codec?

A. Fraunhofer Institute

B. Xiph.Org Foundation

C. Apple Inc.

D. Moving Picture Experts Group

Pages 107-108 True/False

1. The International Telecommunication Union (ITU) introduced algorithms for measuring loudness in 2006.

2. The European Broadcasting Union (EBU) proposed a metering system four years after the ITU introduced its algorithms.

3. The EBU recommended that the loudness level should be constant and uniform within a program.

4. Loudness normalization ensures that the average loudness of all programs is the same.

5. The ITU suggests that subjective loudness is not important to the music industry.

Page 107

1. What are the file sizes of the original WAV file and the converted WAV file according to Table 10.1?

2. What is the definition of loudness as defined by the Advanced Television Systems Committee (ATSC) in 2013?

3. What changes did the International Telecommunication Union (ITU) introduce in 2006 regarding loudness measurement?

4. What was the purpose of the European Broadcasting Union's (EBU) proposal four years after the ITU's algorithms were made available?

5. What types of audio files were produced for Monteverdi's 'Si dolce è'l tormento', and who produced them?

Pages 108-109

1. What are the three time scales used in digital meters that conform to the EBU recommendations, and what do they measure?

2. What is the purpose of the Loudness Range (LRA) in digital meters, and how is it calculated?

3. Explain the concept of K-weighting and its significance in audio metering.

4. What are the two varieties of relative scales mentioned in the text, and how do they differ?

5. What does the absolute scale in digital meters show to recordists, and what is its target level?

Page 112 True/false

1. The EBU suggests an integrated loudness level of -23.0 LUFS.

2. The maximum permitted true peak level according to the EBU is -2.0 dB.

3. The AES promotes a target of -16.0 LUFS for streaming platforms.

4. The normalization targets for streaming platforms are lower than those for broadcast standards.

5. In the USA, the integrated loudness target is -24.0 LUFS with a tolerance of 2.0 dB.

Pages 107-108

1. What organization introduced algorithms for measuring perceived and peak levels of digital audio signals in 2006?

A. European Broadcasting Union (EBU)

B. International Telecommunication Union (ITU)

C. American National Standards Institute (ANSI)

D. Institute of Electrical and Electronics Engineers (IEEE)

2. What is the maximum momentary loudness based on?

A. A sliding window of 1 second

B. A sliding window of 400 milliseconds

C. A fixed time interval of 3 seconds

D. An average of the entire signal

3. What does loudness normalization ensure according to the EBU?

A. The loudness level is constant throughout a program

B. The average loudness of all programs is the same

C. The loudness level varies significantly within a program

D. The peak loudness is always the highest

4. What is the purpose of the maximum true-peak level descriptor?

A. To measure the average loudness of a signal

B. To comply with the technical limits of digital systems

C. To assess the artistic quality of audio

D. To determine the overall energy of a program

5. Which industry has adopted the EBU standards for broadcast?

A. Film industry

B. Music industry

C. Television broadcasting

D. Radio broadcasting

Chapter 11

Page 125 Questions:

1. What are the two main approaches engineers take when recording the sound of a grand piano?

2. How does the raised lid of a grand piano affect the sound frequencies?

3. What is the significance of microphone placement in relation to the piano's sound quality?

4. What frequency range do engineers need to consider when selecting microphones for recording a grand piano?

5. Why might engineers prefer unidirectional microphones when recording a grand piano?

Page 125

1. What is the primary goal of recordists when setting up microphones for a grand piano?

A. To capture a balanced sound

B. To record only high frequencies

C. To focus on low frequencies

D. To eliminate all reflections

2. Where do engineers typically locate the higher frequencies in a stereo recording of a grand piano?

A. On the right side of the playback image

B. In the center of the stereo image

C. On the left side of the playback image

D. Behind the piano

3. What effect does the raised lid of a grand piano have on sound frequencies?

A. It amplifies lower frequencies

B. It reflects mid and high frequencies better

C. It has no effect on sound frequencies

D. It only affects the soundboard

4. What is a common issue when placing a piano too close to a wall?

A. It enhances the sound quality

B. It creates unwelcome early reflections

C. It boosts high frequencies

D. It eliminates phase anomalies

5. Which type of microphone do engineers often choose to achieve a uniform tonal quality across the piano's frequency spectrum?

A. Omnidirectional microphones

B. Unidirectional microphones

C. Dynamic microphones

D. Condenser microphones

Pages 125-126 Questions:

1. What are the three factors that engineers consider when deciding on the location for stereo miking of a grand piano?

2. What is the typical distance range for an ORTF pair of microphones from the piano?

3. Describe the A-B spaced pair technique and its typical microphone spacing.

4. What is the purpose of the mid-side coincident pair technique in recording?

5. How does the placement of microphones affect the sound captured from the piano?

Pages 125-126 True/false

1. Engineers consider tonal balance, direct-to-ambient sound ratio, and stereo image when deciding on microphone placement for recording a grand piano.

2. An ORTF pair of microphones should be placed at least 4 meters away from the piano.

3. The A-B spaced pair technique typically uses directional microphones.

4. The mid-side coincident pair technique allows for adjustment of the stereo image after tracking has been completed.

5. Moving microphones from the tail to the front of the piano decreases mid- and high-frequency information captured.

Pages 127-128 Questions:

1. What is the purpose of placing microphones inside the piano when recording in unfavorable room acoustics?

2. What is the recommended height for positioning microphones above the strings to capture a broad spectrum of sound?

3. How do engineers typically position microphones in a spaced pair configuration for recording piano?

4. What is the effect of using coincident pairs of microphones positioned over the hammers of the piano?

5. What is the advantage of using a quasi-ORTF array with cardioid microphones in piano recording?

Pages 127-128

1. What is the main reason engineers place microphones inside the piano when recording?

A. To enhance the room acoustics

B. To minimize room reverberation effects

C. To capture external sounds

D. To increase microphone sensitivity

2. What is the recommended distance for positioning microphones above the strings to capture sound effectively?

A. 10 to 15 centimeters

B. 20 to 25 centimeters

C. 30 to 35 centimeters

D. 40 to 45 centimeters

3. In a spaced pair configuration, where is one microphone typically placed?

A. Over the bass strings

B. Over the treble strings

C. Under the piano

D. At the back of the piano

4. What is the effect of positioning coincident pairs of microphones directly over the hammers?

A. It produces a warmer sound

B. It produces a brighter, more percussive sound

C. It reduces background noise

D. It captures only low frequencies

5. What is a benefit of using a quasi-ORTF array with cardioid microphones?

A. It captures only high frequencies

B. It provides uneven sound coverage

C. It yields an acceptable sound with even frequency coverage

D. It requires more microphones

Chapter 12

Pages 129-130

1. What do many singers prefer their voices to do before microphones capture the sound?

A. To develop in the room

B. To be recorded immediately

C. To use only spot microphones

D. To avoid any reverberation

2. What is a common strategy for microphone placement when the stage is wide enough?

A. Pointing the piano towards the audience

B. Pointing the piano towards the rear of the stage

C. Placing the singer behind the piano

D. Using only unidirectional microphones

3. What is the purpose of placing the vocalist's microphones behind the music stand?

A. To capture more ambient sound

B. To prevent reflections from entering the microphones

C. To increase the volume of the singer

D. To reduce the distance from the singer

4. What is the recommended distance for placing cardioid spot microphones from the singer?

A. 30 to 50 centimeters

B. 60 to 100 centimeters

C. 1 to 2 meters

D. 2 to 3 feet

5. What do engineers regularly employ in the method involving the piano and singer?

A. Cardioid microphones only

B. Stereo pairs of omnidirectional microphones

C. Only unidirectional microphones

D. Dynamic microphones only

Pages 129-130 Questions

1. What is the significance of the critical distance in microphone placement for recording singers and pianists?

2. How do engineers typically position the singer and piano to minimize microphone bleed?

3. What is the role of the music stand in relation to the vocalist's microphones?

4. What is the rationale behind using a spot microphone in conjunction with a main stereo pair?

5. What adjustments do recordists make to find the ideal microphone placement for the A-B pair of omnidirectional microphones?

Pages 130-131